cogvlm

Maintainer: cjwbw

536

| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| Github Link | View on Github |
| Paper Link | View on Arxiv |

Get summaries of the top AI models delivered straight to your inbox:

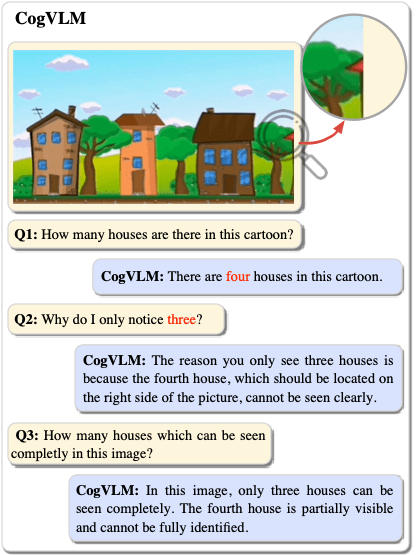

Model overview

CogVLM is an open-source visual language model, packaged for Replicate by the maintainer cjwbw. It comprises four components: a vision transformer encoder, an MLP adapter, a pretrained large language model (GPT), and a visual expert module. CogVLM-17B has 10 billion vision parameters and 7 billion language parameters, and it achieves state-of-the-art performance on 10 classic cross-modal benchmarks, including NoCaps, Flickr30k captioning, and RefCOCO. It can also hold conversations about images.

Similar models include segmind-vega, an open-source distilled Stable Diffusion model with 100% speedup, animagine-xl-3.1, an anime-themed text-to-image Stable Diffusion model, cog-a1111-ui, a collection of anime Stable Diffusion models, and videocrafter, a text-to-video and image-to-video generation and editing model.

Model inputs and outputs

CogVLM accepts an image together with a text query and returns text. It can generate detailed image descriptions, answer various types of visual questions, and engage in multi-turn conversations about images.

Inputs

- Image: The input image that CogVLM will process and generate a response for.
- Query: The text prompt or question that CogVLM will use to generate a response related to the input image.

Outputs

- Text response: The generated text response from CogVLM based on the input image and query.
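The inputs and output listed above map naturally onto a single API call. The following is a minimal sketch, assuming the `replicate` Python client and the lowercase field names `image` and `query`; neither the field names nor the model reference string are given in this summary, so check the API spec on Replicate before relying on them. The helper names `build_cogvlm_input` and `ask_cogvlm` are hypothetical.

```python
from typing import Any


def build_cogvlm_input(image_url: str, query: str) -> dict[str, Any]:
    """Assemble the two inputs described above: an image and a text query."""
    if not image_url.strip():
        raise ValueError("image_url must not be empty")
    if not query.strip():
        raise ValueError("query must not be empty")
    # Assumed field names; confirm them against the model's API spec.
    return {"image": image_url, "query": query}


def ask_cogvlm(model_ref: str, image_url: str, query: str) -> str:
    """Send one image/query pair and return the text response.

    model_ref is the "owner/name:version" string copied from the model page.
    """
    import replicate  # third-party client; expects REPLICATE_API_TOKEN to be set

    output = replicate.run(model_ref, input=build_cogvlm_input(image_url, query))
    # Some models return a string, others an iterator of chunks; handle both.
    return output if isinstance(output, str) else "".join(str(part) for part in output)
```

Keeping payload construction separate from the network call lets the input be validated and inspected without an API token.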

Capabilities

CogVLM is capable of accurately describing images in detail with very few hallucinations. It can understand and answer various types of visual questions, and it has a visual grounding version that can ground the generated text to specific regions of the input image. CogVLM sometimes captures more detailed content than GPT-4V(ision).

What can I use it for?

With its powerful visual and language understanding capabilities, CogVLM can be used for a variety of applications, such as image captioning, visual question answering, image-based dialogue systems, and more. Developers and researchers can leverage CogVLM to build advanced multimodal AI systems that can effectively process and understand both visual and textual information.

Things to try

One interesting aspect of CogVLM is its ability to engage in multi-turn conversations about images. You can try providing a series of related queries about a single image and observe how the model responds and maintains context throughout the conversation. Additionally, you can experiment with different prompting strategies to see how CogVLM performs on various visual understanding tasks, such as detailed image description, visual reasoning, and visual grounding.
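One way to experiment with multi-turn conversations is to fold earlier turns into the next query. The sketch below assumes the hosted endpoint takes a single query string per call, so context has to be carried in the text itself. The `Question:` / `Answer:` layout and the helper name `build_multi_turn_query` are illustrative assumptions, not a documented CogVLM prompt template.

```python
def build_multi_turn_query(history: list[tuple[str, str]], new_question: str) -> str:
    """Fold earlier (question, answer) turns into one query string.

    The Question/Answer layout is an assumed convention for illustration.
    """
    lines = []
    for question, answer in history:
        lines.append(f"Question: {question}")
        lines.append(f"Answer: {answer}")
    # End with an open answer slot for the model to complete.
    lines.append(f"Question: {new_question}")
    lines.append("Answer:")
    return "\n".join(lines)


history = [("What animal is in the picture?", "A tabby cat sitting on a sofa.")]
print(build_multi_turn_query(history, "What color is the sofa?"))
```

After each response, append the new question and answer to `history` and send the same image again with the rebuilt query.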

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

cogvlm

9

cogvlm is an open-source visual language model (VLM) developed by a team at Tsinghua University. Compared with similar visual language models such as llava-13b, cogvlm stands out for its state-of-the-art performance on a wide range of cross-modal benchmarks, including NoCaps, Flickr30k captioning, and various visual question answering tasks. The model has 10 billion visual parameters and 7 billion language parameters, allowing it to understand and generate detailed descriptions of images. Unlike some previous VLMs that struggled with hallucination, cogvlm is known for providing accurate, factual information about visual content.

Model Inputs and Outputs

Inputs

- Image: An image in a standard image format (e.g. JPEG, PNG) provided as a URL.
- Prompt: A text prompt describing the task or question to be answered about the image.

Outputs

- Output: An array of strings, where each string represents the model's response to the provided prompt and image.

Capabilities

cogvlm excels at a variety of visual understanding and reasoning tasks. It can provide detailed descriptions of images, answer complex visual questions, and perform visual grounding: identifying and localizing specific objects or elements in an image based on a textual description.

For example, when shown an image of a park scene and asked "Can you describe what you see in the image?", cogvlm might respond with a detailed paragraph capturing the key elements, such as the lush green grass, the winding gravel path, the trees in the distance, and the clear blue sky overhead. Similarly, if presented with an image of a kitchen and the prompt "Where is the microwave located in the image?", cogvlm would be able to identify the microwave's location and provide its bounding box coordinates.

What Can I Use It For?

The broad capabilities of cogvlm make it a versatile tool for a wide range of applications. Developers and researchers could use the model for tasks such as:

- Automated image captioning and visual question answering for media or educational content
- Visual interface agents that can understand and interact with graphical user interfaces
- Multimodal search and retrieval systems that can match images to relevant textual information
- Visual data analysis and reporting, where the model can extract insights from visual data

By building on cogvlm's visual understanding, these applications can offer more natural and intuitive experiences for users.

Things to Try

One way to explore cogvlm's capabilities is to try various types of visual prompts and see how the model responds. For example, you could provide complex scenes with multiple objects and ask the model to identify and localize specific elements. Or you could give it abstract or artistic images and see how it interprets and describes the visual content.

Another avenue to explore is the model's ability to handle visual grounding tasks. By providing textual descriptions of objects or elements in an image, you can test how accurately cogvlm can pinpoint their locations and extents.
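To evaluate the visual grounding described above, the coordinates have to be pulled out of the text response and mapped back to pixels. The sketch below assumes boxes appear in the response as `[[x0,y0,x1,y1]]` with values normalized to a 0-999 grid. This summary does not state that format, so treat the pattern and the default `grid` of 1000 as assumptions to verify against real output. The helper name `extract_boxes` is hypothetical.

```python
import re

# Assumed box syntax: four integers inside double square brackets.
BOX_PATTERN = re.compile(r"\[\[(\d+),\s*(\d+),\s*(\d+),\s*(\d+)\]\]")


def extract_boxes(
    response: str, width: int, height: int, grid: int = 1000
) -> list[tuple[float, float, float, float]]:
    """Return pixel-space (x0, y0, x1, y1) boxes found in a text response."""
    boxes = []
    for match in BOX_PATTERN.finditer(response):
        x0, y0, x1, y1 = (int(value) for value in match.groups())
        # Rescale from the normalized grid to the actual image size.
        boxes.append(
            (x0 * width / grid, y0 * height / grid, x1 * width / grid, y1 * height / grid)
        )
    return boxes


print(extract_boxes("The microwave is at [[120,340,560,780]].", width=1000, height=500))
```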


cogagent-chat

2

cogagent-chat is a visual language model created by cjwbw that can generate textual descriptions for images. It is similar to other open-source visual language models like cogvlm and to screenshot-parsing models like pix2struct. The model is also related to large text-to-image models like stable-diffusion and can be used for tasks like controlling vision-language models for universal image restoration with models like daclip-uir.

Model inputs and outputs

The cogagent-chat model takes two main inputs: an image and a query. The image is the visual input that the model will analyze, and the query is the natural language prompt that the model will use to generate a textual description of the image. The model also takes a temperature parameter that adjusts the randomness of the textual outputs.

Inputs

- Image: The input image to be analyzed
- Query: The natural language prompt used to generate the textual description
- Temperature: Adjusts randomness of textual outputs, with higher values being more random and lower values being more deterministic

Outputs

- Output: The textual description of the input image generated by the model

Capabilities

cogagent-chat can generate detailed and coherent textual descriptions of images based on a provided query. This can be useful for a variety of applications, such as image captioning, visual question answering, and automated image analysis.

What can I use it for?

You can use cogagent-chat for a variety of projects that involve analyzing and describing images. For example, you could use it to build a tool for automatically generating image captions for social media posts, or to create a visual search engine that can retrieve relevant images based on natural language queries. The model could also be integrated into chatbots or other conversational AI systems to provide more intelligent and visually aware responses.

Things to try

One interesting thing to try with cogagent-chat is using it to generate descriptions of complex or abstract images, such as digital artwork or visualizations. The model's ability to understand and interpret visual information could be used to provide unique and insightful commentary on these types of images. Additionally, you could experiment with the temperature parameter to see how it affects the creativity and diversity of the model's textual outputs.
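To see why a higher temperature gives more random output, it helps to look at how temperature typically reshapes a next-token distribution. The sketch below shows the standard softmax-with-temperature calculation on made-up scores. It is a generic illustration, not cogagent-chat's actual sampling code, and the function name is hypothetical.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


scores = [2.0, 1.0, 0.0]  # illustrative scores for three candidate tokens
print(softmax_with_temperature(scores, 0.5))  # low temperature: mass concentrates on the top score
print(softmax_with_temperature(scores, 2.0))  # high temperature: distribution flattens
```

Sampling from the flatter distribution picks lower-ranked tokens more often, which is what makes high-temperature output more varied.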


stable-diffusion

107.9K

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. Developed by Stability AI, it can create detailed visuals from simple text prompts. The model has several versions, with each newer version being trained for longer and producing higher-quality images than the previous ones.

The main advantage of Stable Diffusion is its ability to generate highly detailed and realistic images from a wide range of textual descriptions. This makes it a powerful tool for creative applications, allowing users to visualize their ideas and concepts in a photorealistic way. The model has been trained on a large and diverse dataset, enabling it to handle a broad spectrum of subjects and styles.

Model inputs and outputs

Inputs

- Prompt: The text prompt that describes the desired image. This can be a simple description or a more detailed, creative prompt.
- Seed: An optional random seed value to control the randomness of the image generation process.
- Width and Height: The desired dimensions of the generated image, which must be multiples of 64.
- Scheduler: The algorithm used to generate the image, with options like DPMSolverMultistep.
- Num Outputs: The number of images to generate (up to 4).
- Guidance Scale: The scale for classifier-free guidance, which controls the trade-off between image quality and faithfulness to the input prompt.
- Negative Prompt: Text that specifies things the model should avoid including in the generated image.
- Num Inference Steps: The number of denoising steps to perform during the image generation process.

Outputs

- Array of image URLs: The generated images are returned as an array of URLs pointing to the created images.

Capabilities

Stable Diffusion is capable of generating a wide variety of photorealistic images from text prompts. It can create images of people, animals, landscapes, architecture, and more, with a high level of detail and accuracy. The model is particularly skilled at rendering complex scenes and capturing the essence of the input prompt.

One of the key strengths of Stable Diffusion is its ability to handle diverse prompts, from simple descriptions to more creative and imaginative ideas. The model can generate images of fantastical creatures, surreal landscapes, and even abstract concepts.

What can I use it for?

Stable Diffusion can be used for a variety of creative applications, such as:

- Visualizing ideas and concepts for art, design, or storytelling
- Generating images for use in marketing, advertising, or social media
- Aiding in the development of games, movies, or other visual media
- Exploring and experimenting with new ideas and artistic styles

The model's versatility and high-quality output make it a valuable tool for anyone looking to bring their ideas to life through visual art.

Things to try

One interesting aspect of Stable Diffusion is its ability to generate images with a high level of detail and realism. Users can experiment with prompts that combine specific elements, such as "a steam-powered robot exploring a lush, alien jungle," to see how the model handles complex and imaginative scenes.

Additionally, the model's support for different image sizes and resolutions allows users to explore the limits of its capabilities. By generating images at various scales, users can see how the model handles the level of detail and complexity required for different use cases, such as high-resolution artwork or smaller social media graphics.
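Two of the input constraints listed for Stable Diffusion (width and height as multiples of 64, at most 4 outputs) are easy to check before sending a request. The sketch below is one way to do that. The function name and the lowercase field names in the returned dictionary are assumptions for illustration, not the confirmed API schema.

```python
def validate_sd_inputs(prompt: str, width: int, height: int, num_outputs: int = 1) -> dict:
    """Check the documented constraints and return a request payload."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    for name, value in (("width", width), ("height", height)):
        # Dimensions must be positive multiples of 64.
        if value <= 0 or value % 64 != 0:
            raise ValueError(f"{name} must be a positive multiple of 64, got {value}")
    if not 1 <= num_outputs <= 4:
        raise ValueError(f"num_outputs must be between 1 and 4, got {num_outputs}")
    # Assumed field names; confirm them against the model's API spec.
    return {"prompt": prompt, "width": width, "height": height, "num_outputs": num_outputs}


print(validate_sd_inputs("a steam-powered robot exploring a lush, alien jungle", 768, 512, 2))
```

Failing fast on the client side avoids spending a request on dimensions the model would reject.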


segmind-vega

1

segmind-vega is an open-source AI model developed by cjwbw that is a distilled and accelerated version of Stable Diffusion, achieving a 100% speedup. It is similar to other AI models created by cjwbw, such as animagine-xl-3.1, tokenflow, and supir, as well as the cog-a1111-ui model created by brewwh.

Model inputs and outputs

segmind-vega is a text-to-image AI model that takes a text prompt as input and generates a corresponding image. The input prompt can include details about the desired content, style, and other characteristics of the generated image. The model also accepts a negative prompt, which specifies elements that should not be included in the output. Additionally, users can set a random seed value to control the stochastic nature of the generation process.

Inputs

- Prompt: The text prompt describing the desired image
- Negative Prompt: Specifications for elements that should not be included in the output
- Seed: A random seed value to control the stochastic generation process

Outputs

- Output Image: The generated image corresponding to the input prompt

Capabilities

segmind-vega is capable of generating a wide variety of photorealistic and imaginative images based on the provided text prompts. The model has been optimized for speed, allowing it to generate images more quickly than the original Stable Diffusion model.

What can I use it for?

With segmind-vega, you can create custom images for a variety of applications, such as social media content, marketing materials, product visualizations, and more. The model's speed and flexibility make it a useful tool for rapid prototyping and experimentation. You can also explore the model's capabilities by trying different prompts and comparing the results to those of similar models like animagine-xl-3.1 and tokenflow.

Things to try

One interesting aspect of segmind-vega is its ability to generate images with consistent styles and characteristics across multiple prompts. By experimenting with different prompts and studying the model's outputs, you can gain insights into how it understands and represents visual concepts. This can be useful for a variety of applications, such as the development of novel AI-powered creative tools or the exploration of the relationships between language and visual perception.
